In a world advocating for AI, we advocate for humans

AI should enhance creativity, not replace it. StoryTribe empowers humans to create more, keeping art and storytelling in our hands.

Yunmie & Joe

StoryTribe Team

In this blog post, I'll discuss some common problems people run into when using AI to generate visual content, and how a different approach could solve them.

Since leaving my full-time job two months ago, I’ve immersed myself in the startup community and met with numerous VCs while raising funding for StoryTribe. A question that frequently comes up is: ‘AI can generate storyboards. Why should someone choose StoryTribe?’

And here’s why AI will never be able to compete.

Every AI-generated output requires human input, which I’d call ‘human-to-AI communication’, because AI can’t read your mind. Unless we all somehow decide to plug our brains directly into computers in the future (an interesting question in itself), there will always be a need for humans to translate their thoughts into instructions for AI.

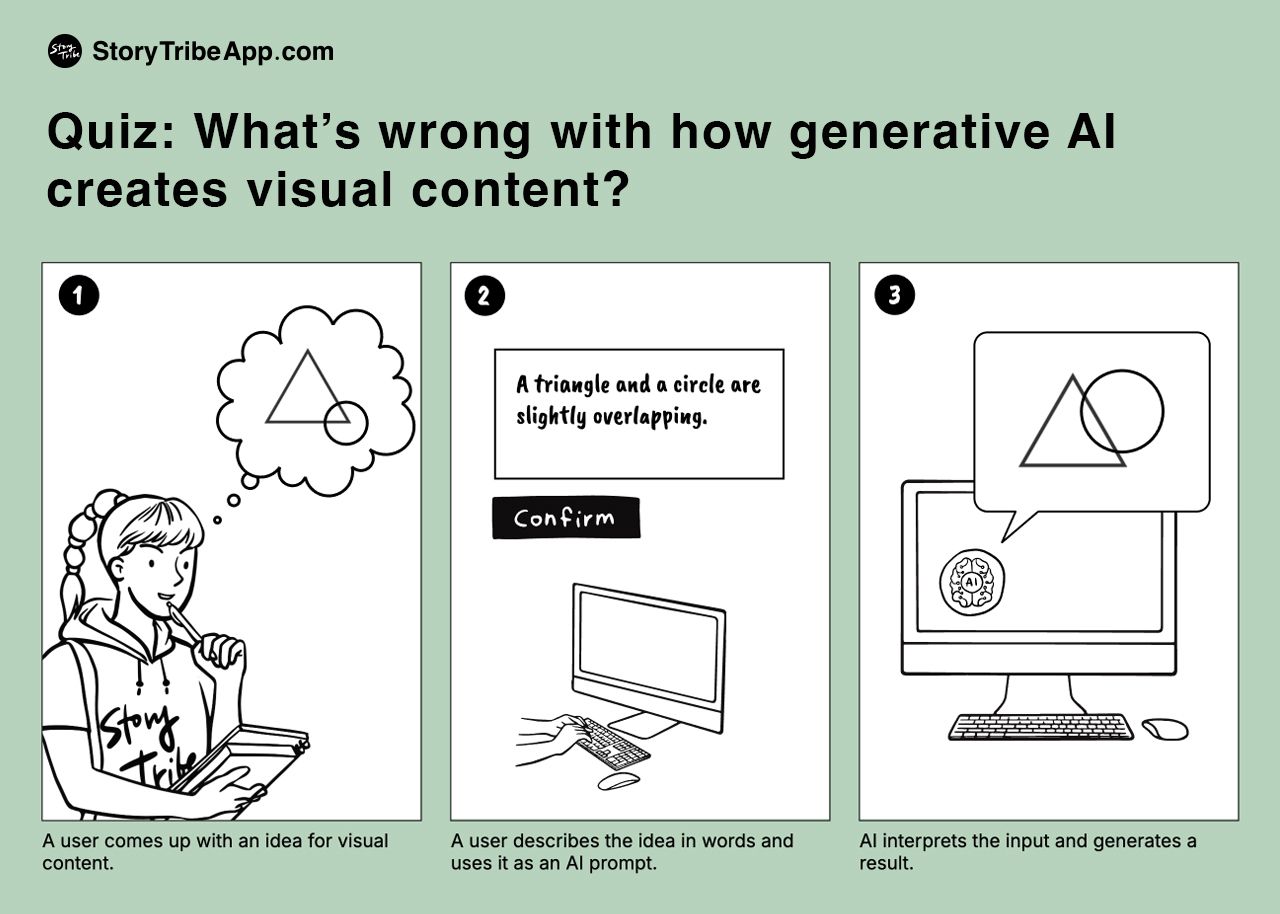

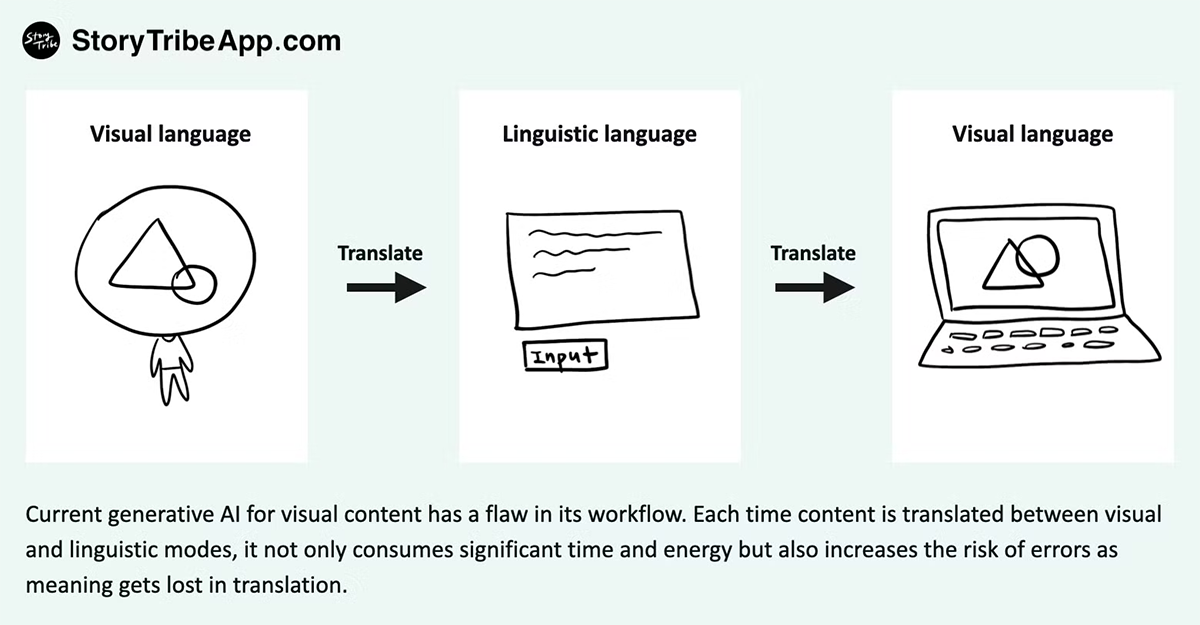

But current generative AI for visual content has a few flaws in its workflow. Let me explain them simply.

When you imagine a scene for a storyboard, your brain first forms an idea or concept. Much like a dream, it is often a mix of ambiguous thoughts and feelings that are hard to describe in words. Since visual information is often claimed to be processed 600 times faster than words in our brains, these thoughts tend to take visual form almost automatically, well before they become words. Putting them into words then takes extra time and effort.

When you write a prompt for the AI, you describe your thoughts in words. The AI then generates images based on its own interpretation of that prompt, and this interpretation is inevitably shaped by the generalised, common understandings it has learned from its training data. But we don’t just have a few generalised thoughts; we have countless creative and unique ideas that the AI may miss.

So things will get lost in translation, and this is perfectly normal. We face the same challenge in human-to-human communication: you say something, and the other person interprets it based on their own past experiences, guessing at your meaning. The chance of misunderstanding is high, as Wiio’s Law puts it: ‘Communication usually fails, except by accident.’

To achieve better results, you need a dialogue rather than one-way communication, where the AI can ask for clarification and you can refine the details, giving more specific instructions as you go. The current system doesn’t allow this kind of back-and-forth or ‘editing’. Each follow-up prompt is treated as a new instruction, so the AI regenerates the entire image from scratch and discards all the good details that had already been developed.

To summarise, the current workflow for generating visual content with AI has these flaws:

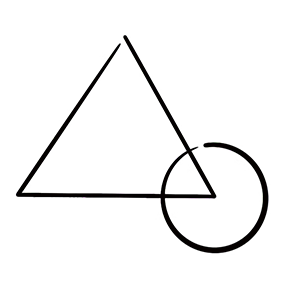

Let’s visualise this workflow with a simple example. Imagine you have the image below in your mind and want to bring it into your storyboard. I deliberately picked a very simple drawing without any characters to make the point.

The first step would be translating this visual idea into a linguistic description. So you might start with something like, 'A sketched triangle and circle are slightly overlapping.'

Below is what I got from my first attempt with DALL-E.

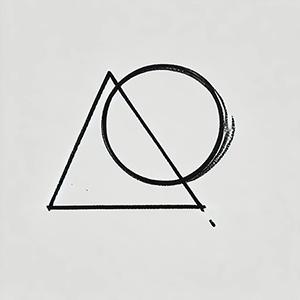

It’s close, but not exactly what I wanted. With this workflow, I can’t edit or move any of the elements, so I’d need to try again with a new description. The next prompt might be something like, 'The triangle is much bigger than the circle, positioned to the left, with the circle to the right and slightly overlapping the bottom-right corner of the triangle.'

Coming up with this extra detail and putting it into words takes a lot of effort, time, and brainpower. It would be far easier if I could simply edit the elements directly: resize the triangle and circle, adjust their positions, and make other changes without rewriting the entire prompt.

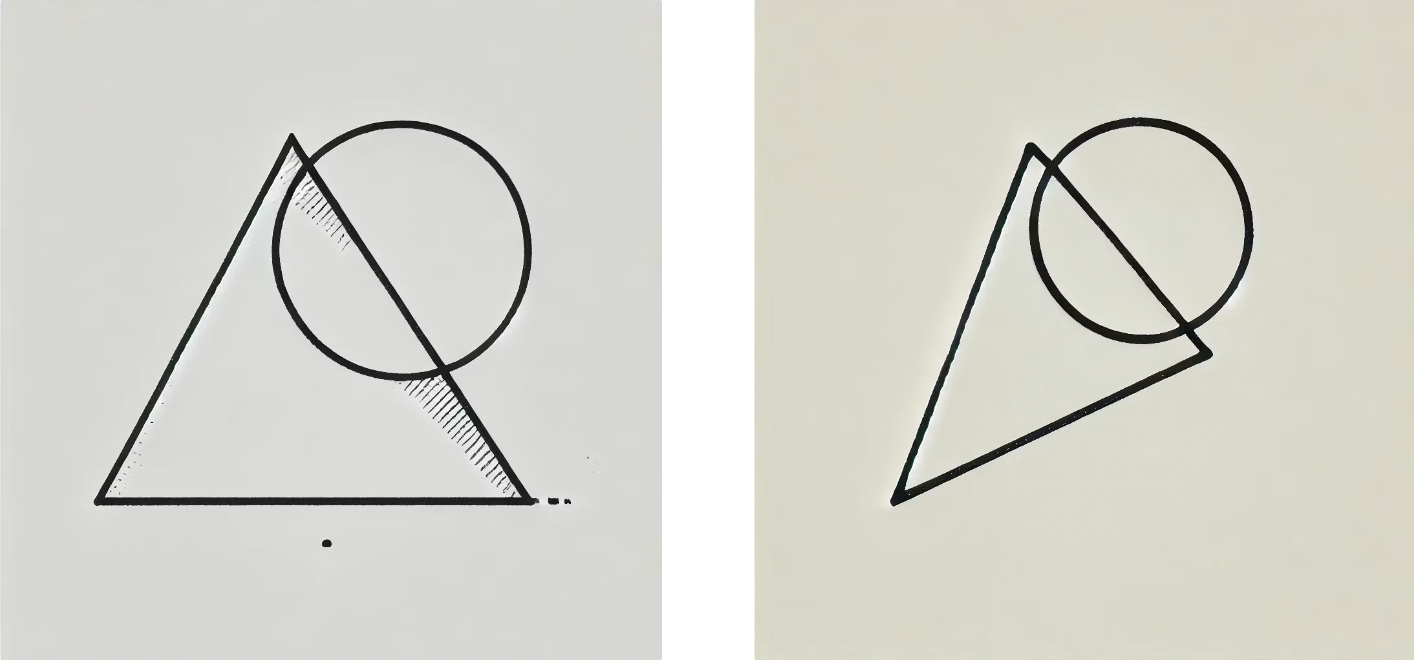

Nevertheless, I tried this detailed description with DALL-E and got the images below on my second and third attempts. The next 10 attempts produced similar results without getting any closer, so I gave up.

That was just a simple example. Now imagine generating a real storyboard with characters, props, and backgrounds. It would involve elements like pose, emotion, movement, location, scale, angle, framing, and more, and the complexity increases dramatically.

This storyboard is for the iconic scene from the movie Parasite, drawn by director Bong Joon-ho himself. To generate this scene with AI, you’d have to describe it in detail, for example: ‘Ki-taek looks back over his shoulder with a surprised expression, holding a crumpled tissue with a spot of blood. The frame cuts at chest level...’ and so on. Coming up with this level of description takes a lot of energy and leaves plenty of room for miscommunication.

Below is what DALL-E generated from this prompt. It’s close, but many details are off. To get it right, we’d need to refine the man’s head angle, the subtleties of his facial expression, the position of his hands and arms, and so on. Now imagine having to describe every one of those changes in words. It could take hundreds of prompts. I hope you see my point.

I'm sure AI will get better at handling details as the technology matures. At the current pace of development, it will soon generate more accurate images after just a few prompts, rather than needing hundreds of attempts as it does today.

But the real issue is the time and effort it takes to reach the final output. The process involves two mode switches: first, the human translates a visual idea into language, which takes significant effort; then the computer translates those words back into visuals, which leaves a lot of room for error. Things get ‘lost in translation’.

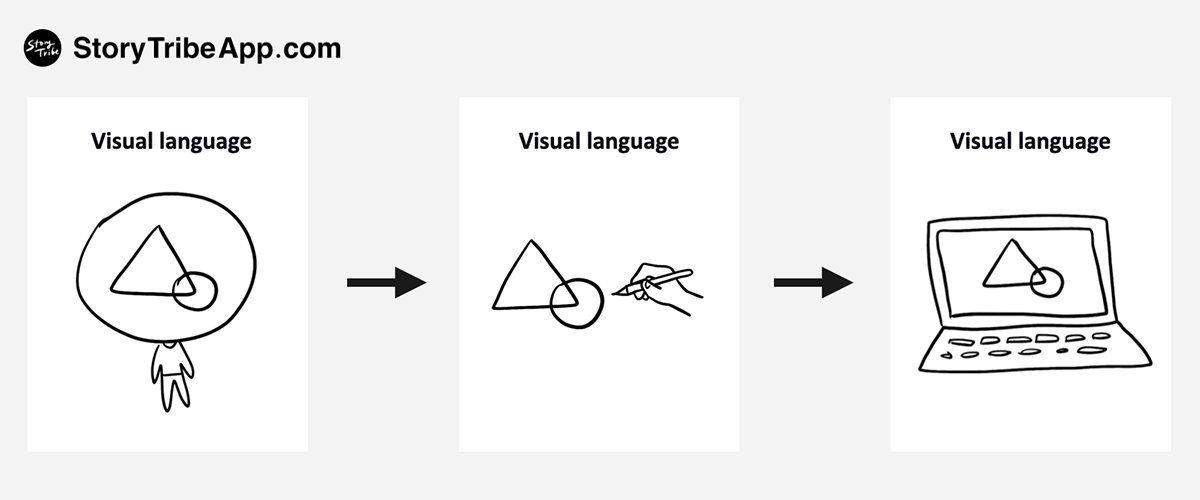

To create a better experience, switching between visual and linguistic modes should be minimised. Elements should also be editable, giving users more control to create unique visuals rather than relying solely on generalised AI output. The process should feel more like a conversation than a one-way exchange: users iterate by continuously refining their work and feeding their feedback back to the AI, so that each interaction further enhances and personalises their visuals.

The best way to remove this mode switch is drawing. When you have an image in your head, you simply sketch it out instead of spelling it out in words, and let AI take it further. You can then have a visual dialogue with the AI by drawing more details onto the existing output, giving it clearer guidance along the way.

The problem is that most people don’t feel confident in their drawing skills. By ‘drawing’, I don’t mean intricate, expert-level illustrations, just simple doodle-style sketches or basic pencil drawings, which I believe almost everyone is capable of. The issue is that many of us grow up feeling inadequate at it. People are embarrassed by their stick-figure drawings and shy away from drawing more. As a result, they stop practising and miss the chance to improve.

My vision for StoryTribe is to solve this very problem and help people realise they can draw. We will provide ready-made elements that users can easily piece together, and make it easy to add personal touches, so they can create drawings of their own.

This approach encourages people to draw more because, with a bit of help from the asset library, it becomes easier to create a drawing they’re satisfied with. It also makes the process more enjoyable, and as they find joy in it, they’ll naturally draw more often. Over time, they’ll improve without even realising it. This is exactly how I learned to draw — I improved by simply doing it, and by enjoying the process.

I want StoryTribe to inspire everyone to draw more by making it effortless and enjoyable to bring ideas to life on a canvas. Drawing should feel as natural as going for a run or listening to music. It’s a basic skill that everyone can benefit from. Yet, for some reason, this isn’t the reality, and I want to change that.

StoryTribe is, of course, still in its infancy, and fully realising the vision will take time. That said, we've already reached 100k users in just six months without spending anything on marketing, which shows that users already see the value of the tool.

What makes StoryTribe unique is its customisable nature. Users have control over the details — choosing what to add, where to place it, adjusting size, angles, and more. It also embraces the power of lo-fi and simplicity (simple line drawing, no colour), making it more approachable and easier to complete. This intentional design choice also helps users focus more on the idea and story, rather than getting caught up in the final look and feel. (We’ll dive into the power of lo-fi in another blog!)

StoryTribe is not against AI. In fact, AI features are on our roadmap. I see StoryTribe and AI working together as companions, enabling a more interactive, dialogue-like form of human-computer communication for visual content.

AI will continue to advance, but as human beings, we have an innate drive to create: we naturally want to make our own unique things, to be different, and to stand out. It’s about having something that’s ours, something that reflects our own identity. So even though AI is there as a tool, it’s human creativity that should ultimately shape the final outcome.

